I think the statute of limitations has passed on this story, so why not tell it? When I was a teenager, I think fourteen or fifteen, I got bored one summer day. I didn’t have a license. My mom was taking a nap, so I got it in my head to take the family minivan out for a spin. I was ready for some wheels. I was ready to drive. At least I thought I was.

We lived out in the country, so I was just going to take a quick drive on some of the roads near our house. I thought maybe I’d drive by the houses of some kids I went to school with. It was going to be fun.

I got out of our neighborhood and took a left on the first side road I came to. Two kids I went to school with lived on that road. It was an old country road with houses on the right side and fields and forest on the left. I drove past their houses, up a little hill, and turned around to come back the other way.

As I started back down the hill toward home, I got the idea to put the van in neutral and coast the rest of the way down. (Editor’s note: Rev, I think Nikola Research could use your talents.) Why? Just impulse. The minivan had an automatic transmission, and the gear shift was down on the floor. I took my eyes off the road for a split second to look down and shift into neutral. As I did, the front right tire veered slightly off the edge of the road. There was a steep drop-off at the edge of this road. Before I realized what was happening, the tire slipped down the embankment, and I couldn’t get it back.

The van was quickly sucked off the road and into the edge of a forest. At about 30–40 mph, it plowed through tree after tree, blowing out the windows on the right side. It came to a stop when it hit a large oak head-on. I had slowed down by then, and thankfully I was uninjured. The van wasn’t.

I climbed up the embankment and got back onto the street. I wasn’t wearing shoes. I didn’t have a phone. I didn’t even have a wallet on me. I was five miles from home. Thankfully, a stranger drove down the road just as I got back onto the street. She made sure I was okay and drove me home.

An Introduction to AI

This is the story that came to mind as I sat down to write my first article on artificial intelligence. Are we ready for AI? Or do we need more time to develop before we can safely get behind the wheel?

First, let me say that I am not an expert on artificial intelligence. I have worn many hats in my life, but computer science has never been one of them. I intend to approach this subject as I have every other subject I have studied. I look for multiple people in the field whom I perceive to be:

- Genuine

- Trustworthy

- Experts in the field

- Representative of differing viewpoints

Then, with an open mind, I listen to what they have to say. I investigate the subject from every angle that interests me. Then I form my own opinions. In this article, my goal is simply to gather some of the key issues and data I have seen so far in the sector. For most of us, I think it will be important to get a handle on the basics of what is going on so that we can better discuss the issues and prepare for their implications on our lives. Any opinions I share are just that… opinions.

Why pursue AI? What are the benefits? MIT computer science professor Aleksander Madry put it this way: “Machine learning is changing, or will change, every industry, and leaders need to understand the basic principles, the potential, and the limitations.” A good introductory article for the average investor can be found here: “Machine Learning, Explained.”

From my perspective, if successful, artificial intelligence could be a key turning point for humanity. Imagine tasking an AGI with curing cancer, resolving conflict, improving education, and helping us to design new technologies that we haven’t even conceived of yet. That’s a big carrot. The benefits may indeed be as large as we can imagine. That is the upside, but what are the risks?

Before digging in, I think it’s important to build a vocabulary for some of the key issues in the field. Let’s start there.

Basic Terminology

AGI – “Artificial general intelligence (AGI) is the ability of an intelligent agent to understand or learn any intellectual task that human beings or other animals can.” (Wikipedia)

Strong AI vs Weak AI – “Strong AI contrasts with weak AI (or narrow AI), which is not intended to have general cognitive abilities but is designed to solve exactly one problem.” (Wikipedia)

Superintelligence – “A superintelligence is a hypothetical agent that possesses intelligence far surpassing that of the brightest and most gifted human minds. ‘Superintelligence’ may also refer to a property of problem-solving systems (e.g., superintelligent language translators or engineering assistants) whether or not these high-level intellectual competencies are embodied in agents that act in the world.” (Wikipedia)

GPU cluster – “A GPU cluster is a computer cluster in which each node is equipped with a Graphics Processing Unit (GPU). By harnessing the computational power of modern GPUs via General-Purpose Computing on Graphics Processing Units (GPGPU), very fast calculations can be performed with a GPU cluster.” (Wikipedia)

Alignment versus Capabilities

I think about it this way. If you give a powerful AGI system a task, how much does it factor in the well-being of you and other humans as it works to complete that task? What kind of task? Any task. Whatever the task is, a powerful AGI system will likely be able to propose and implement solutions that achieve goals we cannot reach today. But will it pose a danger along the way?

This question is, I believe, one of the key potential bottlenecks to further safe progress in AI: how well is the AI system aligned with humanity? Progress on new AI capabilities has been advancing much faster than progress on alignment and safety. Wikipedia defines AI alignment this way:

“In the field of artificial intelligence (AI), AI alignment research aims to steer AI systems towards their designers’ intended goals and interests. Some definitions of AI alignment require that the AI system advances more general goals such as human values, other ethical principles, or the intentions its designers would have if they were more informed and enlightened. An aligned AI system advances the intended objective; a misaligned AI system is competent at advancing some objective, but not the intended one.”

In this section, I want to look at what three of the leading experts and decision theorists in the field have to say in their own words. They are:

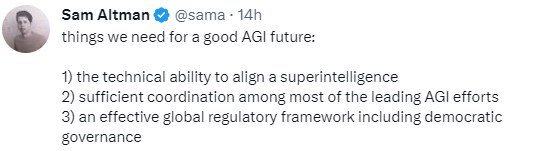

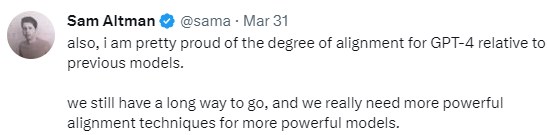

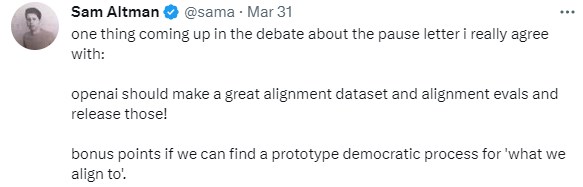

- Sam Altman (CEO, OpenAI)

- Ilya Sutskever (Chief Scientist, OpenAI)

- Eliezer Yudkowsky (Machine Intelligence Research Institute)

In the Lex Fridman podcast below, and in his own tweets, Sam Altman discusses OpenAI, AGI, GPT-4, and OpenAI’s philosophy on AI alignment. OpenAI’s policy on alignment can be viewed here.


In the interview below, Ilya Sutskever discusses current progress at OpenAI, the road to AGI, challenges to alignment, and what the future might hold.

In the Lex Fridman interview below, Eliezer Yudkowsky discusses GPT-4, AGI, alignment, and possible risks. You can also read his article from last week in Time magazine here: “Pausing AI Development Isn’t Enough. We Need to Shut it All Down.”

Opinions

I support the development of artificial intelligence, and eventually AGI, with the caveat that it must be pursued safely and responsibly for the benefit of all. Work on AI safety is essential, at least until we more fully understand the risks of moving toward stronger AI. I am also in favor of helping the public gain a broader understanding of artificial intelligence. If the world is about to change, we need to start preparing to handle that change successfully.

I think the environment most likely to lead to a successful outcome is one of international cooperation. A pause in work on AI capabilities would be useful, giving time for some sort of international conference to develop a framework for the safe development of artificial intelligence.

I am not afraid of the future. Whatever is coming down the pike, I want to look straight at it, wide-eyed with understanding.